regularization machine learning l1 l2

L2 regularization is the most common of the regularization techniques and is also known as weight decay or Ridge Regression. It is called weight decay because it forces the weights to decay towards zero, but not exactly to zero.

L1 And L2 Regularization In This Article We Will Understand Why By Renu Khandelwal Datadriveninvestor

It is used as a common metric to.

We consider supervised learning in the presence of very many irrelevant features and study two different regularization methods for preventing overfitting. What is L2 regularization?

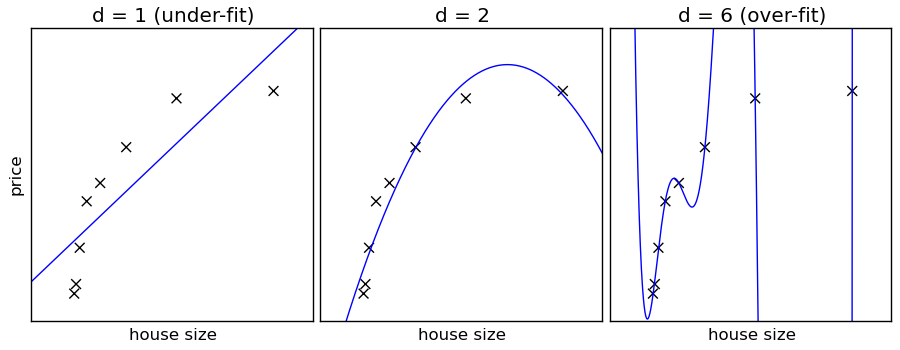

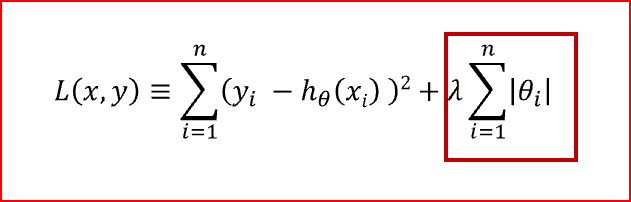

L1 regularization helps reduce the problem of overfitting by modifying the coefficients. L1 and L2 norm.

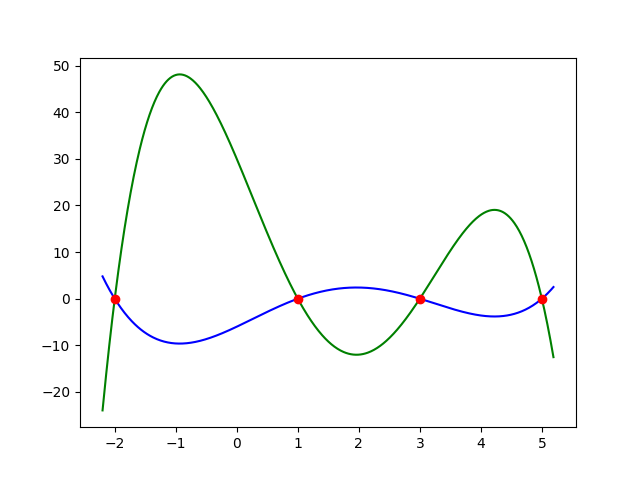

Feature selection L1 vs. Ridge regression is a regularization technique used to reduce the complexity of the model. In this Python machine learning tutorial for beginners we will look into: (1) what overfitting and underfitting are, and (2) how to address overfitting using L1 and L2 regularization.

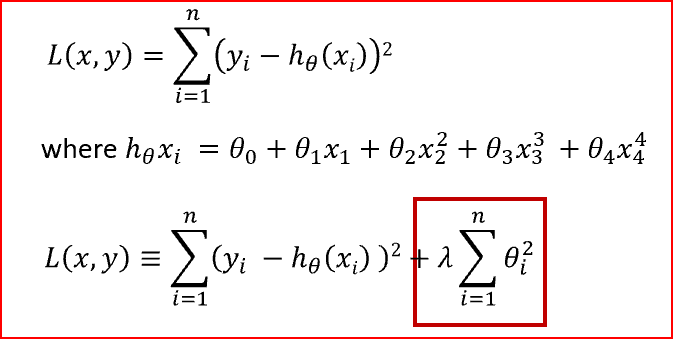

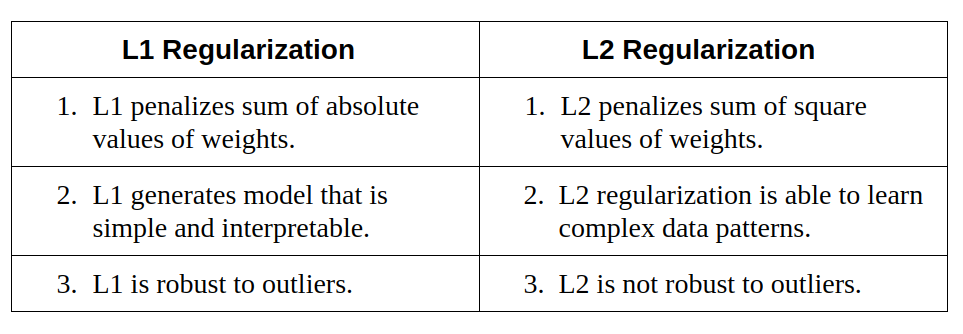

What are L1 and L2 regularization in deep learning? When L2 regularization is used, a regularization term that penalizes large weights is added to the loss function. What is the main difference between L1 and L2 regularization in machine learning?
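As a minimal sketch of that idea, the snippet below adds an L2 term to a mean squared error loss for a linear model. The function names, the toy data, and the coefficient `lam` are illustrative assumptions rather than any library's API; some texts also scale the penalty by 1/2.

```python
def mse(weights, features, targets):
    """Mean squared error of a linear model without a bias term."""
    total = 0.0
    for x, y in zip(features, targets):
        prediction = sum(w * xi for w, xi in zip(weights, x))
        total += (prediction - y) ** 2
    return total / len(targets)


def l2_penalty(weights, lam):
    """L2 term: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def regularized_loss(weights, features, targets, lam):
    """Data loss plus the L2 penalty on the weights."""
    return mse(weights, features, targets) + l2_penalty(weights, lam)


# Toy data (hypothetical): three samples with two features each.
features = [[1.0, 2.0], [2.0, 0.0], [0.0, 1.0]]
targets = [5.0, 2.0, 2.0]

small = [1.0, 2.0]     # fits the toy data exactly, so only the penalty remains
large = [10.0, -20.0]  # large weights pay a much larger penalty

print(regularized_loss(small, features, targets, lam=0.1))
print(regularized_loss(large, features, targets, lam=0.1))
```

With `lam=0`, the function reduces to the plain data loss; raising `lam` makes large weights progressively more expensive.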

A regression model that uses the L1 regularization technique is called Lasso Regression, and a model that uses L2 is called Ridge Regression. L1 regularization is one method of regularization. Focusing on logistic regression we.
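To illustrate how Lasso and Ridge differ, here is a sketch of the simplest possible case: a single coefficient whose unregularized least-squares estimate is `w_ols`. Under that assumption, the ridge objective `0.5*(w - w_ols)**2 + 0.5*lam*w**2` and the lasso objective `0.5*(w - w_ols)**2 + lam*abs(w)` both have closed-form minimizers. The function names and `lam` are hypothetical.

```python
def ridge_1d(w_ols, lam):
    """Minimizer of 0.5*(w - w_ols)**2 + 0.5*lam*w**2: proportional shrinkage."""
    return w_ols / (1.0 + lam)


def lasso_1d(w_ols, lam):
    """Minimizer of 0.5*(w - w_ols)**2 + lam*abs(w): soft thresholding."""
    magnitude = max(abs(w_ols) - lam, 0.0)
    if magnitude == 0.0:
        return 0.0
    return magnitude if w_ols > 0 else -magnitude


# Compare both estimators on a large, a small, and a negative estimate.
for w_ols in (3.0, 0.5, -0.2):
    print(w_ols, ridge_1d(w_ols, lam=1.0), lasso_1d(w_ols, lam=1.0))
```

In this simplified setting, ridge shrinks every estimate but leaves it nonzero, while lasso sets the small estimates to exactly zero. That behaviour is why L1 regularization is associated with feature selection.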

It tends to be more specific than gradient descent but it is still a gradient descent optimization problem. In this technique the cost function is altered by. L2 regularization and rotational invariance Andrew Y.

The idea is to add a penalty to the loss. There are three commonly used regularization techniques to control the complexity of machine learning models. Euclidean distance (L2 norm): Euclidean distance is the shortest distance between two points in an N-dimensional space, also known as Euclidean space.
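The Euclidean distance described above is the L2 norm of the difference between two points. A small sketch using only the standard library follows; the helper names are illustrative, and the L1 norm is included only for comparison.

```python
import math


def l1_norm(vector):
    """Sum of absolute values of the components."""
    return sum(abs(v) for v in vector)


def l2_norm(vector):
    """Square root of the sum of squared components."""
    return math.sqrt(sum(v * v for v in vector))


def euclidean_distance(a, b):
    """L2 norm of the component-wise difference between two points."""
    return l2_norm([ai - bi for ai, bi in zip(a, b)])


point_a = [1.0, 2.0, 3.0]
point_b = [4.0, 6.0, 3.0]

print(euclidean_distance(point_a, point_b))  # 5.0
print(l1_norm([3.0, -4.0]))                  # 7.0
print(l2_norm([3.0, -4.0]))                  # 5.0
```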

In short, regularization in machine learning is the process of constraining, regularizing, or shrinking the coefficient estimates towards zero. Ng, ang@cs.stanford.edu, Computer Science Department, Stanford University, Stanford, CA 94305. It is also called L2 regularization.

L2 regularization or weight decay is a technique used to improve the training of a machine learning model by preventing overfitting.
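The name "weight decay" can be seen in a single gradient step. The sketch below assumes the penalty is written as `0.5 * lam * sum(w**2)`, so it contributes `lam * w` to each gradient component; the function name, learning rate, and `lam` are hypothetical choices for illustration.

```python
def sgd_step_with_weight_decay(weights, gradients, learning_rate, lam):
    """One gradient step on data_loss + 0.5 * lam * sum(w**2).

    The penalty adds lam * w to each gradient component, which is the same
    as scaling each weight by (1 - learning_rate * lam) and then applying
    the data gradient.
    """
    return [
        w - learning_rate * (g + lam * w)
        for w, g in zip(weights, gradients)
    ]


weights = [2.0, -1.0]
zero_gradients = [0.0, 0.0]  # isolate the effect of the penalty

# Each step multiplies every weight by 1 - 0.1 * 0.5 = 0.95.
for _ in range(100):
    weights = sgd_step_with_weight_decay(weights, zero_gradients, 0.1, 0.5)

print(weights)
```

With the data gradient set to zero, each weight shrinks geometrically: it moves closer to zero at every step without being set exactly to zero.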

Machine Learning Tutorial Python 17 L1 And L2 Regularization Lasso Ridge Regression Youtube

Quickly Master L1 Vs L2 Regularization Ml Interview Q A

Diagram For L2 L1 Regularization Download Scientific Diagram

L1 Vs L2 Regularization Math Intuition R Mlquestions

Machine Learning Explained Regularization R Bloggers

What Is The Difference Between L1 And L2 Regularization How Does It Solve The Problem Of Overfitting Which Regularizer To Use And When Quora

Machine Learning L2 Regularization In Logistic Regression Vs Nn Stack Overflow

L2 Vs L1 Regularization In Machine Learning Ridge And Lasso Regularization

Fighting Overfitting With L1 Or L2 Regularization Which One Is Better Neptune Ai

L1 And L2 Regularization Methods Explained Built In

Introduction To Regularization Nick Ryan

Towards Preventing Overfitting Datacamp

Regularization Mathematics Wikipedia

Stay Away From Overfitting L2 Norm Regularization Weight Decay And L1 Norm Regularization Techniques By Inara Koppert Anisimova Unpack Medium

Differences Between L1 And L2 As Loss Function And Regularization